Are you just starting your deep learning journey and finding that things run slowly? Or are you already on one, but don’t want to buy expensive hardware, and cloud platforms like AWS, Azure or GCP seem too complicated? Google’s Colaboratory is an easy, handy tool that will get you started right away!

So what is Google’s Colaboratory?

It is a free, cloud-based service from Google that lets developers experiment with snippets of Python code. It offers a Jupyter-notebook-style interface that you can use much like a standard Python environment, but with extra features. On top of that, it provides a free Tesla K80 GPU for sessions of up to 12 hours; after that, you simply reconnect to the service with a single click! In short, unless your task needs very large resources and long run times, you can use Google Colab to run it faster.

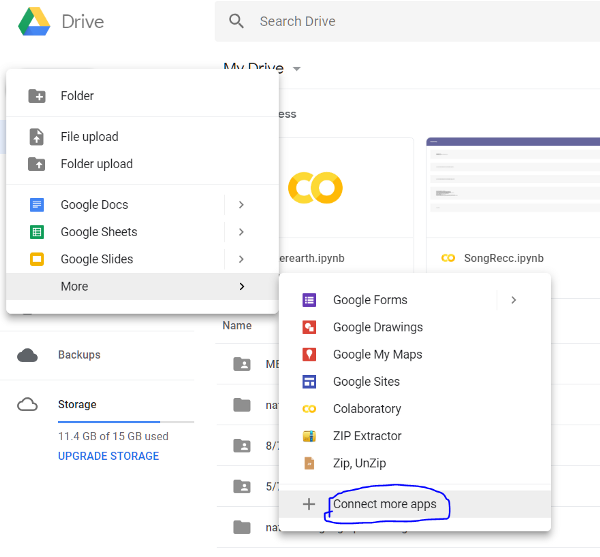

Getting Started

Head to your Google Drive -> click the “New (+)” button -> click “More” -> click “Connect more apps” -> search for “Colaboratory” and add it. (This is a one-time step.) You can then create a new notebook: click “New (+)” -> click “More” -> click “Colaboratory”.

(This option appears once you have added the app in the previous step.)

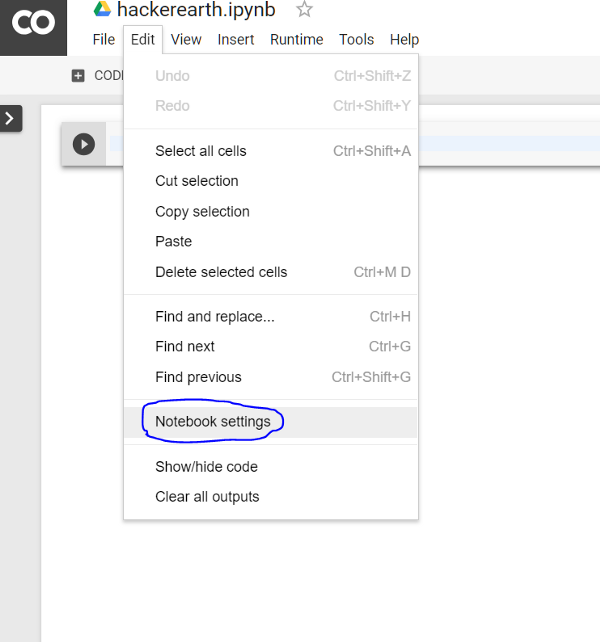

Adding a free GPU

In the notebook interface -> click “Edit” -> click “Notebook settings” -> select “GPU” as the “Hardware accelerator”.

In the notebook interface, you write Python code in cells (blocks) and press Shift+Enter to run a cell on its own. Unlike a standard Python script, execution does not have to be top-down: the number shown beside each cell tells you the order in which the cells were run.

For more on why GPUs matter for deep learning, see https://www.analyticsvidhya.com/blog/2017/05/gpus-necessary-for-deep-learning/

Adding Data and Dependencies

For your machine learning projects, you will need data and dependencies (libraries).

To install a library, run the following in any cell with the library’s name. A line that starts with ! is run as a shell command, so every cell can also act as a bash interpreter:

!pip install numpy
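Re-running `!pip install` in every session is harmless but slow. A minimal sketch, assuming you only want to install a package when it cannot already be imported (the helper name `ensure_package` is hypothetical), checks with `importlib.util.find_spec` and calls pip through the current interpreter:

```python
import importlib.util
import subprocess
import sys
from typing import Optional


def ensure_package(module_name: str, pip_name: Optional[str] = None) -> bool:
    """Install a package with pip only if its module cannot be found.

    Returns True if an install was attempted, False if the module was
    already importable. `pip_name` covers cases where the pip package
    name differs from the import name (e.g. "opencv-python" vs "cv2").
    """
    if importlib.util.find_spec(module_name) is not None:
        return False  # already available, nothing to install
    # Use the running interpreter's pip so the package lands in the same environment.
    subprocess.check_call(
        [sys.executable, "-m", "pip", "install", pip_name or module_name]
    )
    return True
```

For example, `ensure_package("cv2", "opencv-python")` installs OpenCV only on a fresh runtime where it is missing.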

To get your data, if it is hosted on a server with a direct download link, you can run:

!wget --no-check-certificate https://your_download_link

Remember that the download is done by Google’s servers, so it does not depend on your internet speed. This makes it faster than uploading the data yourself, so try to get a direct download link.
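The same download can also be done from Python. A minimal sketch using only the standard library’s `urllib.request.urlretrieve`; the function name `download` and the destination folder are illustrative assumptions, and unlike `--no-check-certificate` this keeps certificate checking enabled:

```python
import os
import urllib.parse
import urllib.request


def download(url: str, dest_dir: str = ".") -> str:
    """Download `url` into `dest_dir` and return the local file path."""
    os.makedirs(dest_dir, exist_ok=True)
    # Derive a file name from the last path component of the URL.
    name = os.path.basename(urllib.parse.urlparse(url).path) or "download"
    path = os.path.join(dest_dir, name)
    urllib.request.urlretrieve(url, path)  # runs on the Colab VM, not your PC
    return path
```

In a notebook you would call something like `download("https://your_download_link/data.zip", "data")` and then unzip the result.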

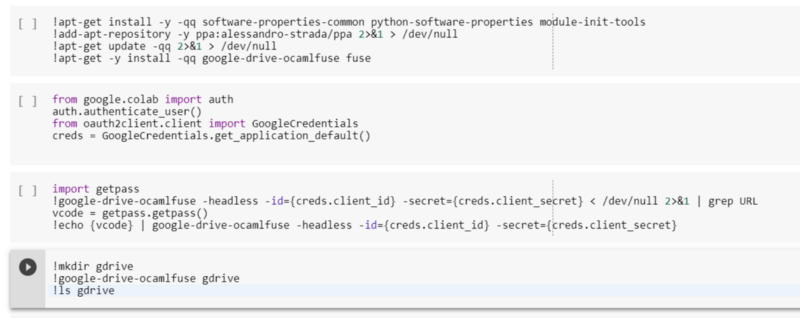

If you want to get data from your Google Drive, you need to link your Drive to Colaboratory every time you open a notebook. Run the following and follow the instructions it prints:

!apt-get install -y -qq software-properties-common python-software-properties module-init-tools

!add-apt-repository -y ppa:alessandro-strada/ppa 2>&1 > /dev/null

!apt-get update -qq 2>&1 > /dev/null

!apt-get -y install -qq google-drive-ocamlfuse fuse

from google.colab import auth

auth.authenticate_user()

from oauth2client.client import GoogleCredentials

creds = GoogleCredentials.get_application_default()

import getpass

!google-drive-ocamlfuse -headless -id={creds.client_id} -secret={creds.client_secret} < /dev/null 2>&1 | grep URL

vcode = getpass.getpass()

!echo {vcode} | google-drive-ocamlfuse -headless -id={creds.client_id} -secret={creds.client_secret}

!mkdir gdrive

!google-drive-ocamlfuse gdrive

!ls gdrive

You can now use shell commands such as ls, cd, unzip and mkdir to navigate further into your gdrive folder. If you want your downloaded data to be stored in your Drive, perform these steps before the wget step.
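Newer Colab runtimes also ship a built-in helper, `google.colab.drive.mount`, that can replace the ocamlfuse steps above. A minimal sketch, assuming that module is available (it is importable only inside Colab); the wrapper name `mount_drive` and the `/content/drive` mount point are our own choices:

```python
def mount_drive(mount_point: str = "/content/drive") -> bool:
    """Mount Google Drive when running inside Colab.

    Returns True if the mount call was made, False when google.colab is
    not importable (for example, when the code runs outside Colab).
    """
    try:
        from google.colab import drive  # only importable inside Colab
    except ImportError:
        return False
    # Asks you to authorize access the first time it runs in a session.
    drive.mount(mount_point)
    return True
```

Call `mount_drive()` once per session, then browse your files with `!ls /content/drive`.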

Some useful Hacks

Clone a GitHub repo

!git clone https://github.com/wxs/keras-mnist-tutorial.git

Check GPU allocation status

import tensorflow as tf

tf.test.gpu_device_name()

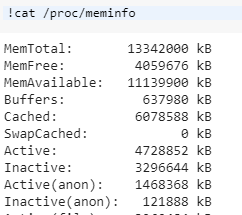

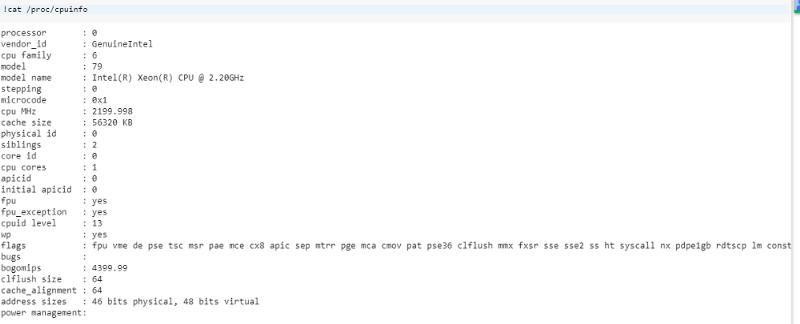
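If TensorFlow is not installed, you can also ask the NVIDIA driver directly. A minimal sketch, assuming the `nvidia-smi` tool is on the PATH (it is on GPU runtimes); the helper name `gpu_names` is hypothetical:

```python
import shutil
import subprocess
from typing import List


def gpu_names() -> List[str]:
    """Return the names of visible NVIDIA GPUs, or [] if none are found."""
    if shutil.which("nvidia-smi") is None:
        return []  # no NVIDIA driver tools: most likely a CPU-only runtime
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0:
        return []  # tool present but no usable GPU
    # One GPU name per non-empty output line.
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


print(gpu_names() or "No GPU allocated")
```

On a GPU runtime this prints a one-element list with the card’s name; on a CPU runtime it prints the fallback message.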

Check RAM and CPU status

!cat /proc/meminfo

!cat /proc/cpuinfo
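The raw output is long. A minimal sketch that turns `/proc/meminfo` into a dictionary so you can read just the fields you need; it assumes the Linux `Key:   value kB` format, and the helper name `parse_meminfo` is hypothetical:

```python
import os
from typing import Dict


def parse_meminfo(text: str) -> Dict[str, int]:
    """Parse /proc/meminfo-style text into {field: number}; most fields are in kB."""
    info = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        parts = rest.split()
        if parts:
            info[key.strip()] = int(parts[0])  # first token is the number
    return info


if os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        mem = parse_meminfo(f.read())
    # 1 GiB = 1024**2 kB
    print(f"Total RAM: {mem.get('MemTotal', 0) / 1024**2:.1f} GiB")
    print(f"Available: {mem.get('MemAvailable', 0) / 1024**2:.1f} GiB")
print("CPU cores:", os.cpu_count())
```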

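Change working directory

Each ! command runs in its own shell, so !cd does not persist to the next line. Use the %cd magic instead, then check the result with !ls:

%cd gdrive

!ls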
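Inside Python code, the standard-library `os.chdir` changes the directory for the whole notebook kernel. A minimal sketch of a context manager that switches directory temporarily and always restores it; the name `working_directory` is hypothetical (Python 3.11+ ships an equivalent `contextlib.chdir`):

```python
import contextlib
import os
from typing import Iterator


@contextlib.contextmanager
def working_directory(path: str) -> Iterator[str]:
    """Temporarily switch the current working directory to `path`."""
    previous = os.getcwd()
    os.chdir(path)
    try:
        yield os.getcwd()
    finally:
        os.chdir(previous)  # restore even if the body raises
```

For example, `with working_directory("gdrive"): print(os.listdir("."))` lists the folder without leaving later cells inside it.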

Conclusion

It’s a great tool, and in the early days I found myself using it very often. Still, I encourage you to explore AWS, GCP and Microsoft Azure as well, since they will give you exposure to many other useful services and accelerate your data science journey. If you are a student, check https://education.github.com/pack for free student credits on some paid services.